The Impact of AI on CO₂ Emissions

AI often feels weightless: you type a question into ChatGPT (or any other model), and within seconds an answer appears. But behind that screen runs a vast network of servers that consumes energy – and therefore emits CO₂.

How big is that impact really? And what does it mean for you as a user?

Training vs. Usage: Where Does the Impact Lie?

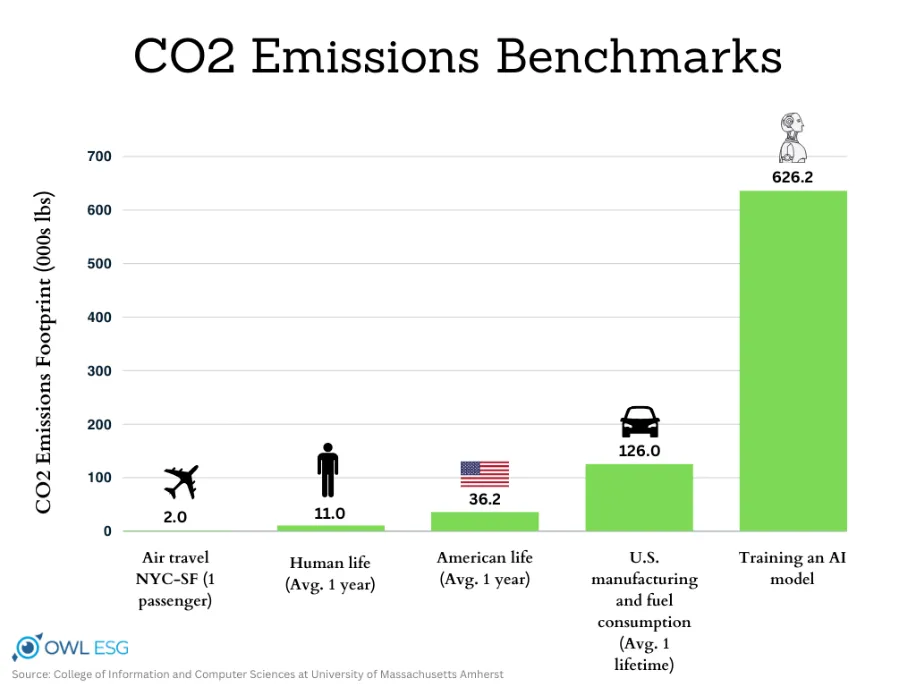

Many people assume that the biggest emissions come from training AI models. Training is indeed carbon-intensive: training GPT-3 is estimated to have generated hundreds of tons of CO₂-equivalent, depending on the energy mix used (Carbon Footprint of AI Data Centers).

But that’s not the whole story. With millions of people now sending prompts every day, usage (inference) may account for the larger share of emissions. According to ScienceNews, the cumulative footprint of inference could eventually outweigh that of training.

One Prompt: How Much CO₂ Does It Cost?

A recent analysis, How Hungry is AI?, estimated that a single short GPT-4 prompt consumes about 0.43 Wh of electricity. Converted, this equals roughly 0.17 grams of CO₂ per prompt with the average European energy mix, which implies a carbon intensity of about 0.4 g CO₂ per Wh.
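
The conversion from energy to CO₂ can be sketched in a few lines of Python. This is a minimal illustration, not the method used in the cited analysis: the function names are hypothetical, and the grid intensity is simply back-calculated from the two figures quoted above rather than taken from a measured European average.

```python
def implied_intensity_g_per_kwh(energy_wh: float, co2_g: float) -> float:
    """Carbon intensity (g CO2 per kWh) implied by an energy/CO2 pair."""
    return co2_g / (energy_wh / 1000.0)


def prompt_co2_grams(energy_wh: float, intensity_g_per_kwh: float) -> float:
    """Convert the energy of one prompt (Wh) into grams of CO2."""
    return energy_wh / 1000.0 * intensity_g_per_kwh


# Figures quoted in the article for a short GPT-4 prompt.
ENERGY_WH = 0.43
CO2_G = 0.17

# Assumption: the intensity is derived from the article's own numbers.
intensity = implied_intensity_g_per_kwh(ENERGY_WH, CO2_G)

print(f"Implied grid intensity: {intensity:.0f} g CO2/kWh")
print(f"CO2 per prompt: {prompt_co2_grams(ENERGY_WH, intensity):.2f} g")
```

With these inputs the implied intensity comes out at about 395 g CO₂ per kWh. Swapping in the intensity of a different grid changes the per-prompt figure proportionally.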

That may sound small. But what does it look like in practice?

Comparisons to Make It Tangible

- 🚗 Car ride: Driving 1 km emits ~150 g CO₂. That equals roughly 880 prompts.

- 📱 Charging a smartphone: One full charge takes ~5 Wh, or about 2 g CO₂. That’s roughly 12 prompts.

- ☕ Cup of coffee: On average ~100 g CO₂. That equals nearly 600 prompts.

In other words: your individual prompt doesn’t weigh much. But at billions of prompts per day, it adds up quickly.
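
The comparisons above are simple divisions, and the same arithmetic shows how quickly the total grows at scale. The sketch below uses only the figures quoted in this article. The daily prompt volume of one billion is a hypothetical round number chosen for illustration, not a reported statistic.

```python
# Per-prompt figures quoted in the article for a short GPT-4 prompt.
CO2_PER_PROMPT_G = 0.17
ENERGY_PER_PROMPT_WH = 0.43


def prompts_equivalent(total: float, per_prompt: float) -> float:
    """How many prompts match a given amount of CO2 (g) or energy (Wh)."""
    return total / per_prompt


car_km = prompts_equivalent(150, CO2_PER_PROMPT_G)      # 1 km by car, in g CO2
phone = prompts_equivalent(5, ENERGY_PER_PROMPT_WH)     # one full charge, in Wh
coffee = prompts_equivalent(100, CO2_PER_PROMPT_G)      # one cup of coffee, in g CO2

# Assumption: a hypothetical volume of one billion prompts per day.
PROMPTS_PER_DAY = 1_000_000_000
daily_tonnes = PROMPTS_PER_DAY * CO2_PER_PROMPT_G / 1_000_000  # grams -> tonnes

print(f"1 km by car   = {car_km:.0f} prompts")
print(f"Phone charge  = {phone:.0f} prompts")
print(f"Cup of coffee = {coffee:.0f} prompts")
print(f"Daily total   = {daily_tonnes:.0f} tonnes CO2")
```

Under these assumptions, the three comparisons work out to about 882, 12 and 588 prompts, and one billion prompts per day would correspond to roughly 170 tonnes of CO₂ daily.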

And What About GPT-5?

At this point, there are no peer-reviewed studies that measure the energy use of GPT-5 in detail. OpenAI itself has not released figures either.

However, early analyst reports and media articles offer estimates. DataCenterDynamics reported that a single GPT-5 prompt could average around 18 Wh, with peaks of up to 40 Wh. The average is roughly 40 times the ~0.43 Wh estimated for a short GPT-4 prompt (How Hungry is AI?), although the two figures come from different sources and may not be directly comparable. See also arxiv.org.
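
To put the reported GPT-5 figures next to the GPT-4 estimate, the short sketch below computes the ratios and a rough CO₂ value per prompt. It assumes the same carbon intensity that the GPT-4 figures in this article imply (0.17 g per 0.43 Wh). That is an assumption made for comparison only, since the GPT-5 estimates come from a different source.

```python
# Energy per prompt, as quoted in the article.
GPT4_WH = 0.43        # short GPT-4 prompt (How Hungry is AI?)
GPT5_AVG_WH = 18.0    # reported average (DataCenterDynamics)
GPT5_PEAK_WH = 40.0   # reported peak (DataCenterDynamics)

# Assumption: reuse the intensity implied by the GPT-4 figures (g CO2 per Wh).
INTENSITY_G_PER_WH = 0.17 / 0.43

avg_ratio = GPT5_AVG_WH / GPT4_WH
peak_ratio = GPT5_PEAK_WH / GPT4_WH
avg_co2_g = GPT5_AVG_WH * INTENSITY_G_PER_WH
peak_co2_g = GPT5_PEAK_WH * INTENSITY_G_PER_WH

print(f"Average GPT-5 prompt: {avg_ratio:.0f}x GPT-4, about {avg_co2_g:.1f} g CO2")
print(f"Peak GPT-5 prompt:    {peak_ratio:.0f}x GPT-4, about {peak_co2_g:.1f} g CO2")
```

On these numbers an average GPT-5 prompt would use about 42 times the energy of a short GPT-4 prompt (about 7.1 g CO₂), and a peak prompt about 93 times (about 15.8 g CO₂).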

What we learn from this:

- The larger and more complex the model – and the more detailed the task – the higher the energy use.

- Simple questions require relatively little computing power.

- Long or multimodal prompts are much heavier.

This makes it clear that user behavior also plays a role in the overall CO₂ impact.

Can AI Also Help Reduce CO₂?

Interestingly, AI – if applied wisely – can actually contribute to CO₂ reduction.

In a study (Can artificial intelligence technology reduce carbon emissions?), researchers found that countries or regions with more AI innovation report lower average CO₂ emissions. Patent analyses suggest that AI-driven innovation often leads to more efficient processes and reduced energy use.

This suggests that AI is not only part of the problem but can also be part of the solution.

What Can You Do Yourself?

- Use AI mindfully: bundle related questions into one prompt instead of sending ten separate ones.

- Choose sustainable providers: some companies run their data centers (partly) on renewable energy.

- Acknowledge the scale: one prompt is small, but collectively prompts add up.

Conclusion

AI helps us work smarter and more efficiently, but it is not free for the climate. Individually, a prompt amounts to only fractions of a gram of CO₂, but at a global scale the total becomes significant.

Using prompts responsibly and choosing sustainable tools can make a difference. And when applied strategically, AI can even contribute to reducing emissions.
